Arrhythmia Data Analysis and Model Classification Project

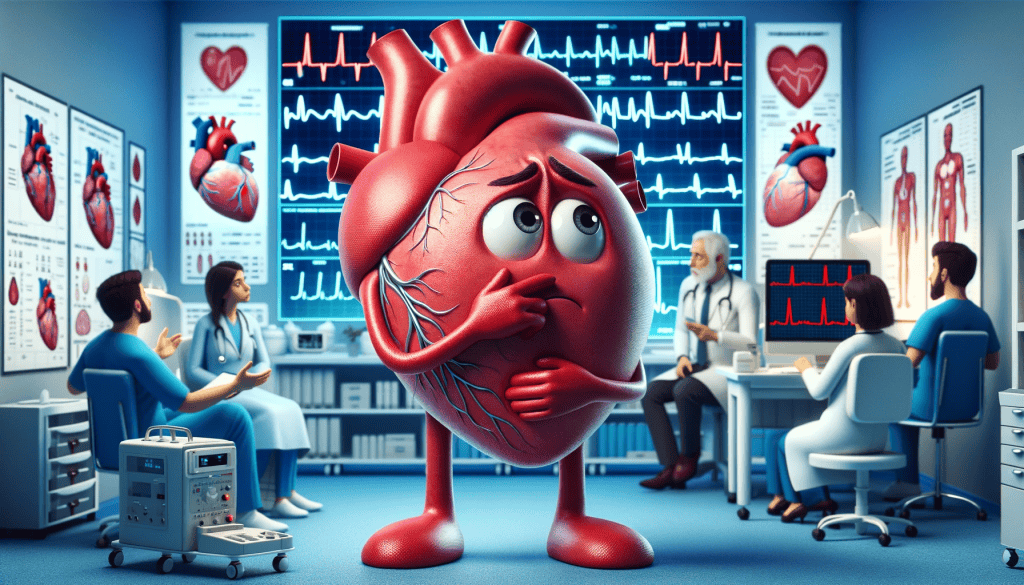

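Project Background

Heart disease remains one of the major global health challenges: it affects the quality of life of millions of people and is among the leading causes of death worldwide. With the rapid development of data science and machine learning in recent years, new tools and methods have become available for predicting and diagnosing heart disease. In this project we apply these techniques to electrocardiogram (ECG) data to identify arrhythmias, with the aim of supporting early diagnosis and intervention.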

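Project Goals

The main goal of this project is to develop a machine learning model that can accurately identify and classify arrhythmias. By analysing ECG data in depth, we hope to detect potential heart problems, in particular coronary artery disease and right bundle branch block, the two most represented abnormal classes in the dataset. A further goal is to improve the efficiency and accuracy of diagnosis by medical professionals and thereby improve patient outcomes.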

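Project Applications

The potential applications range from clinical diagnosis to remote medical monitoring. A machine learning model can help doctors diagnose arrhythmias quickly and accurately, especially when resources are limited or a fast decision is needed. The model could also be integrated into wearable devices and smartphone apps to give users real-time heart health monitoring.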

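Dataset Description

Our dataset consists of 452 distinct instances spread across 16 classes. Of these, 245 instances are labelled "normal"; the rest cover 12 different types of arrhythmia. Among the arrhythmia types, the most represented are "coronary artery disease" and "right bundle branch block".

The dataset contains 279 features. They cover basic patient information such as age, sex, weight and height, as well as detailed ECG measurements. This high-dimensional feature set provides rich information that helps us understand the complexity of arrhythmias.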

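Model Selection

In this project we use several machine learning models to identify and classify arrhythmias. The six main models are:

- KNN (K-Nearest Neighbors) Classifier: KNN classifies a data point by looking at its 'K' nearest neighbours. It suits this project because an arrhythmia type can often be identified from its similarity to examples with known labels.

- Logistic Regression: Logistic regression is a statistical method widely used for binary classification. For arrhythmia it is particularly suited to separating normal from abnormal ECGs.

- Decision Tree Classifier: A decision tree classifies by learning simple decision rules. This is useful for datasets with many features, such as our ECG data, because the learned rules expose relationships between individual features and arrhythmia types.

- Linear SVC (Support Vector Classifier): A linear support vector classifier copes well with large feature spaces and performs well on problems whose classes are separated by a clear margin.

- Kernelized SVC: A kernelized support vector machine can find decision boundaries in a high-dimensional space, which makes it a good fit for complex pattern recognition problems such as distinguishing several arrhythmia types.

- Random Forest Classifier: A random forest is an ensemble method that combines the predictions of many decision trees. This tends to improve both accuracy and robustness, especially with many features.

These models were chosen based on the characteristics of the dataset and the specific needs of arrhythmia recognition. Our goal is to compare their performance and find the best solution for improving diagnostic accuracy and efficiency.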
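The comparison described above can be sketched as follows. This is a minimal illustration, not the project's actual training code: it runs on synthetic data from `make_classification` rather than the arrhythmia dataset, and the hyperparameters (for example `n_neighbors=5`, `max_iter=1000`) are assumptions chosen only so the example runs.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC, LinearSVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the ECG feature matrix (assumption: not the real data)
X, y = make_classification(n_samples=300, n_features=30, n_informative=10,
                           n_classes=3, random_state=0)

# Distance- and margin-based models are wrapped with a scaler
models = {
    "KNN": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    "Logistic Regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Linear SVC": make_pipeline(StandardScaler(), LinearSVC(max_iter=10000)),
    "Kernelized SVC": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

# Mean 5-fold cross-validated accuracy for each model
scores = {name: cross_val_score(model, X, y, cv=5).mean() for name, model in models.items()}
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name:20s} {score:.3f}")
```

On a dataset as imbalanced as this one, accuracy alone can be misleading, so a per-class metric such as macro-averaged F1 (`scoring="f1_macro"`) may be a more informative choice.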

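Project Methodology

Exploratory Data Analysis (EDA)

The first step of the project is a thorough exploratory data analysis (EDA). The key at this stage is to understand the structure and distribution of the dataset and its potential problems. We analysed the correlations between features, the distribution of samples across classes, and possible data quality issues. This lets us identify and resolve problems that could affect model performance before training begins.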

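Data Balancing

The class distribution in the arrhythmia dataset is imbalanced, which can bias a model toward the majority classes. To address this, we applied data sampling techniques such as oversampling the minority classes and undersampling the majority class to balance the dataset. This can improve the model's ability to recognise uncommon arrhythmia types.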
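Random oversampling, one of the sampling techniques mentioned above, can be sketched with pandas alone. The helper name `oversample_minority` and the toy frame are hypothetical and only illustrate the idea; the project itself may have used a different tool.

```python
import pandas as pd

def oversample_minority(df, label_col, random_state=0):
    """Randomly oversample every class up to the size of the largest class."""
    target = df[label_col].value_counts().max()
    parts = []
    for _, group in df.groupby(label_col):
        # Draw the missing rows with replacement (zero rows for the largest class)
        extra = group.sample(n=target - len(group), replace=True, random_state=random_state)
        parts.append(pd.concat([group, extra]))
    # Shuffle so that classes are not stored in contiguous blocks
    return pd.concat(parts).sample(frac=1, random_state=random_state).reset_index(drop=True)

# Toy data (assumption): three classes with 7, 2 and 1 rows
toy = pd.DataFrame({"feature": range(10), "label": [1] * 7 + [2] * 2 + [3] * 1})
balanced = oversample_minority(toy, "label")
print(balanced["label"].value_counts().to_dict())
```

Resampling is normally applied to the training split only, so that duplicated rows do not leak into the validation or test data.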

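Dimensionality Reduction

Because the dataset has 279 features and is therefore high-dimensional, we used principal component analysis (PCA) to reduce its dimensionality. PCA helps remove redundant features while retaining most of the important information. This reduces computational cost and can also improve the model's ability to generalise.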
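A minimal PCA sketch is shown below. The data are synthetic, and the 95% explained-variance threshold is an assumption made for illustration, not a value taken from the project.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
# Synthetic stand-in: 200 samples, 50 correlated features built from 5 latent factors
latent = rng.normal(size=(200, 5))
X = latent @ rng.normal(size=(5, 50)) + 0.1 * rng.normal(size=(200, 50))

# Standardize first, then keep enough components to explain 95% of the variance
reducer = make_pipeline(StandardScaler(), PCA(n_components=0.95, svd_solver="full"))
X_reduced = reducer.fit_transform(X)
print(X.shape, "->", X_reduced.shape)
```

Standardizing before PCA matters here because the features are measured in different units (for example centimetres, kilograms and milliseconds), and PCA is sensitive to scale.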

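Model Training

After preparing the data, we moved on to model training. We trained and tested KNN, Logistic Regression, Decision Tree, Linear SVC, Kernelized SVC and Random Forest models on the dataset. Using cross-validation and hyperparameter tuning, we evaluated how each model performs on arrhythmia recognition, with the aim of finding the best predictive model.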
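Cross-validated hyperparameter tuning of this kind can be sketched with `GridSearchCV`. The example uses synthetic data and a small, assumed parameter grid for KNN only; the grids actually used in the project are not stated in the text.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the prepared feature matrix (assumption)
X, y = make_classification(n_samples=300, n_features=20, n_informative=8, random_state=0)

# Scaling lives inside the pipeline so that it is re-fitted on every training fold
pipe = Pipeline([("scale", StandardScaler()), ("knn", KNeighborsClassifier())])
param_grid = {"knn__n_neighbors": [3, 5, 7, 9], "knn__weights": ["uniform", "distance"]}

# Stratified folds keep the class proportions similar in every split
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
search = GridSearchCV(pipe, param_grid, cv=cv, scoring="accuracy")
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```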

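Code Implementation

The detailed code is presented in two parts:

1. Data exploration (EDA): detailed code

Arrhythmia Classification: Data Exploration

The dataset used in this project consists of 452 distinct examples spread across 16 classes. Of the 452 examples, 245 belong to "normal" people. There are also 12 different types of arrhythmia, the most represented of which are "coronary artery disease" and "right bundle branch block".

- We have 279 features, including the patient's age, sex, weight and height as well as ECG-related information. The number of features is clearly large relative to the number of available examples.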

1. Data Wrangling

Importing the required libraries

import pandas as pd

import numpy as np

import scipy as sp

import math as mt

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns

from sklearn.impute import SimpleImputer

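Reading the data

We first read the data file and create a data frame that will hold the outputs of all the models we run, which makes the models easier to compare.

# Read the CSV file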

df=pd.read_csv("arrhythmia.csv",header=None)

I. View the first five rows of the dataset

df.head()

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 270 | 271 | 272 | 273 | 274 | 275 | 276 | 277 | 278 | 279 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 75 | 0 | 190 | 80 | 91 | 193 | 371 | 174 | 121 | -16 | ... | 0.0 | 9.0 | -0.9 | 0.0 | 0.0 | 0.9 | 2.9 | 23.3 | 49.4 | 8 |

| 1 | 56 | 1 | 165 | 64 | 81 | 174 | 401 | 149 | 39 | 25 | ... | 0.0 | 8.5 | 0.0 | 0.0 | 0.0 | 0.2 | 2.1 | 20.4 | 38.8 | 6 |

| 2 | 54 | 0 | 172 | 95 | 138 | 163 | 386 | 185 | 102 | 96 | ... | 0.0 | 9.5 | -2.4 | 0.0 | 0.0 | 0.3 | 3.4 | 12.3 | 49.0 | 10 |

| 3 | 55 | 0 | 175 | 94 | 100 | 202 | 380 | 179 | 143 | 28 | ... | 0.0 | 12.2 | -2.2 | 0.0 | 0.0 | 0.4 | 2.6 | 34.6 | 61.6 | 1 |

| 4 | 75 | 0 | 190 | 80 | 88 | 181 | 360 | 177 | 103 | -16 | ... | 0.0 | 13.1 | -3.6 | 0.0 | 0.0 | -0.1 | 3.9 | 25.4 | 62.8 | 7 |

5 rows × 280 columns

II. View the last five rows of the dataset

df.tail()

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 270 | 271 | 272 | 273 | 274 | 275 | 276 | 277 | 278 | 279 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 447 | 53 | 1 | 160 | 70 | 80 | 199 | 382 | 154 | 117 | -37 | ... | 0.0 | 4.3 | -5.0 | 0.0 | 0.0 | 0.7 | 0.6 | -4.4 | -0.5 | 1 |

| 448 | 37 | 0 | 190 | 85 | 100 | 137 | 361 | 201 | 73 | 86 | ... | 0.0 | 15.6 | -1.6 | 0.0 | 0.0 | 0.4 | 2.4 | 38.0 | 62.4 | 10 |

| 449 | 36 | 0 | 166 | 68 | 108 | 176 | 365 | 194 | 116 | -85 | ... | 0.0 | 16.3 | -28.6 | 0.0 | 0.0 | 1.5 | 1.0 | -44.2 | -33.2 | 2 |

| 450 | 32 | 1 | 155 | 55 | 93 | 106 | 386 | 218 | 63 | 54 | ... | -0.4 | 12.0 | -0.7 | 0.0 | 0.0 | 0.5 | 2.4 | 25.0 | 46.6 | 1 |

| 451 | 78 | 1 | 160 | 70 | 79 | 127 | 364 | 138 | 78 | 28 | ... | 0.0 | 10.4 | -1.8 | 0.0 | 0.0 | 0.5 | 1.6 | 21.3 | 32.8 | 1 |

5 rows × 280 columns

III. Basic description of the data frame

# Dimensions of the dataset

df.shape

(452, 280)

# Concise summary of the data frame

df.info()

<class 'pandas.core.frame.DataFrame'> RangeIndex: 452 entries, 0 to 451 Columns: 280 entries, 0 to 279 dtypes: float64(120), int64(155), object(5) memory usage: 988.9+ KB

# Descriptive statistics of the data frame

df.describe().T

| | count | mean | std | min | 25% | 50% | 75% | max |

|---|---|---|---|---|---|---|---|---|

| 0 | 452.0 | 46.471239 | 16.466631 | 0.0 | 36.00 | 47.00 | 58.000 | 83.0 |

| 1 | 452.0 | 0.550885 | 0.497955 | 0.0 | 0.00 | 1.00 | 1.000 | 1.0 |

| 2 | 452.0 | 166.188053 | 37.170340 | 105.0 | 160.00 | 164.00 | 170.000 | 780.0 |

| 3 | 452.0 | 68.170354 | 16.590803 | 6.0 | 59.00 | 68.00 | 79.000 | 176.0 |

| 4 | 452.0 | 88.920354 | 15.364394 | 55.0 | 80.00 | 86.00 | 94.000 | 188.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 275 | 452.0 | 0.514823 | 0.347531 | -0.8 | 0.40 | 0.50 | 0.700 | 2.4 |

| 276 | 452.0 | 1.222345 | 1.426052 | -6.0 | 0.50 | 1.35 | 2.100 | 6.0 |

| 277 | 452.0 | 19.326106 | 13.503922 | -44.2 | 11.45 | 18.10 | 25.825 | 88.8 |

| 278 | 452.0 | 29.473230 | 18.493927 | -38.6 | 17.55 | 27.90 | 41.125 | 115.9 |

| 279 | 452.0 | 3.880531 | 4.407097 | 1.0 | 1.00 | 1.00 | 6.000 | 16.0 |

275 rows × 8 columns

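Handling missing values

While browsing the dataset, we observed that 5 of the 279 attributes contain missing values encoded as '?'. Our approach is to first replace '?' with NaN and then use isnull to count the missing values.

Checking the dataset for null values

# Count the total number of null values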

pd.isnull(df).sum().sum()

0

# Replace '?' with NaN

df = df.replace('?', np.nan)

# Count the total number of null values again

nu=pd.isnull(df).sum().sum()

nu

408
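As an alternative to replacing '?' after loading, pandas can treat the marker as missing at read time through the `na_values` parameter of `read_csv`. The sketch below uses a tiny in-memory CSV as a stand-in for `arrhythmia.csv`; the three rows are made up for illustration.

```python
import io
import pandas as pd

# Tiny in-memory stand-in for arrhythmia.csv (assumption: same '?' convention)
raw = io.StringIO("75,0,190,?\n56,1,165,63\n54,0,?,75\n")
toy = pd.read_csv(raw, header=None, na_values="?")

print(toy.isnull().sum().sum())  # total number of missing cells
print(toy.dtypes.tolist())       # affected columns are parsed as numeric, not object
```

Reading the file this way also avoids the `object` columns reported by `df.info()`, because the affected columns are parsed as floats from the start.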

Visualizing the distribution of the missing data:

pd.isnull(df).sum().plot()

plt.xlabel('Columns')

plt.ylabel('Total number of null value in each column')

# Zoom in on the columns around the missing values

pd.isnull(df).sum()[7:17].plot(kind="pie")

plt.xlabel('Columns')

plt.ylabel('Total number of null value in each column')

# Show exactly which columns contain missing values

pd.isnull(df).sum()[9:16].plot(kind="bar")

plt.xlabel('Columns')

plt.ylabel('Total number of null value in each column')

# Drop column 13 because it contains many missing values.

df.drop(columns = 13, inplace=True)

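Imputation using the mean strategy for the missing values (NaN)

# Work on a copy so that the original data frame is not modified during imputation

new_df = df.copy()

# The columns that contained '?' were read as strings, so convert every column to a numeric dtype

new_df = new_df.apply(pd.to_numeric)

# Columns that still contain missing values (kept for reference)

cols_with_missing = [col for col in new_df.columns if new_df[col].isnull().any()]

# Replace each missing value with the mean of its column

my_imputer = SimpleImputer(strategy="mean")

new_df = pd.DataFrame(my_imputer.fit_transform(new_df))

new_df.columns = df.columns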

# Imputed data frame

new_df.head()

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 270 | 271 | 272 | 273 | 274 | 275 | 276 | 277 | 278 | 279 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 75.0 | 0.0 | 190.0 | 80.0 | 91.0 | 193.0 | 371.0 | 174.0 | 121.0 | -16.0 | ... | 0.0 | 9.0 | -0.9 | 0.0 | 0.0 | 0.9 | 2.9 | 23.3 | 49.4 | 8.0 |

| 1 | 56.0 | 1.0 | 165.0 | 64.0 | 81.0 | 174.0 | 401.0 | 149.0 | 39.0 | 25.0 | ... | 0.0 | 8.5 | 0.0 | 0.0 | 0.0 | 0.2 | 2.1 | 20.4 | 38.8 | 6.0 |

| 2 | 54.0 | 0.0 | 172.0 | 95.0 | 138.0 | 163.0 | 386.0 | 185.0 | 102.0 | 96.0 | ... | 0.0 | 9.5 | -2.4 | 0.0 | 0.0 | 0.3 | 3.4 | 12.3 | 49.0 | 10.0 |

| 3 | 55.0 | 0.0 | 175.0 | 94.0 | 100.0 | 202.0 | 380.0 | 179.0 | 143.0 | 28.0 | ... | 0.0 | 12.2 | -2.2 | 0.0 | 0.0 | 0.4 | 2.6 | 34.6 | 61.6 | 1.0 |

| 4 | 75.0 | 0.0 | 190.0 | 80.0 | 88.0 | 181.0 | 360.0 | 177.0 | 103.0 | -16.0 | ... | 0.0 | 13.1 | -3.6 | 0.0 | 0.0 | -0.1 | 3.9 | 25.4 | 62.8 | 7.0 |

5 rows × 279 columns

# The dataset now has zero null values.

pd.isnull(new_df).sum().sum()

0
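To make the effect of mean imputation concrete, here is a small sketch on a toy frame. The column names `a` and `b` and their values are made up; only `SimpleImputer` itself comes from the code above.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Toy frame (assumption) with one missing value per column
toy = pd.DataFrame({"a": [1.0, np.nan, 3.0], "b": [10.0, 20.0, np.nan]})

# Each NaN is replaced by the mean of the observed values in its column
imputer = SimpleImputer(strategy="mean")
filled = pd.DataFrame(imputer.fit_transform(toy), columns=toy.columns)
print(filled)
```

The missing entry in `a` becomes 2.0 (the mean of 1 and 3) and the missing entry in `b` becomes 15.0 (the mean of 10 and 20); observed values are left unchanged.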

Generating the final dataset

# Create the column names

final_df_columns=["Age","Sex","Height","Weight","QRS_Dur",

"P-R_Int","Q-T_Int","T_Int","P_Int","QRS","T","P","J","Heart_Rate",

"Q_Wave","R_Wave","S_Wave","R'_Wave","S'_Wave","Int_Def","Rag_R_Nom",

"Diph_R_Nom","Rag_P_Nom","Diph_P_Nom","Rag_T_Nom","Diph_T_Nom",

"DII00", "DII01","DII02", "DII03", "DII04","DII05","DII06","DII07","DII08","DII09","DII10","DII11",

"DIII00","DIII01","DIII02", "DIII03", "DIII04","DIII05","DIII06","DIII07","DIII08","DIII09","DIII10","DIII11",

"AVR00","AVR01","AVR02","AVR03","AVR04","AVR05","AVR06","AVR07","AVR08","AVR09","AVR10","AVR11",

"AVL00","AVL01","AVL02","AVL03","AVL04","AVL05","AVL06","AVL07","AVL08","AVL09","AVL10","AVL11",

"AVF00","AVF01","AVF02","AVF03","AVF04","AVF05","AVF06","AVF07","AVF08","AVF09","AVF10","AVF11",

"V100","V101","V102","V103","V104","V105","V106","V107","V108","V109","V110","V111",

"V200","V201","V202","V203","V204","V205","V206","V207","V208","V209","V210","V211",

"V300","V301","V302","V303","V304","V305","V306","V307","V308","V309","V310","V311",

"V400","V401","V402","V403","V404","V405","V406","V407","V408","V409","V410","V411",

"V500","V501","V502","V503","V504","V505","V506","V507","V508","V509","V510","V511",

"V600","V601","V602","V603","V604","V605","V606","V607","V608","V609","V610","V611",

"JJ_Wave","Amp_Q_Wave","Amp_R_Wave","Amp_S_Wave","R_Prime_Wave","S_Prime_Wave","P_Wave","T_Wave",

"QRSA","QRSTA","DII170","DII171","DII172","DII173","DII174","DII175","DII176","DII177","DII178","DII179",

"DIII180","DIII181","DIII182","DIII183","DIII184","DIII185","DIII186","DIII187","DIII188","DIII189",

"AVR190","AVR191","AVR192","AVR193","AVR194","AVR195","AVR196","AVR197","AVR198","AVR199",

"AVL200","AVL201","AVL202","AVL203","AVL204","AVL205","AVL206","AVL207","AVL208","AVL209",

"AVF210","AVF211","AVF212","AVF213","AVF214","AVF215","AVF216","AVF217","AVF218","AVF219",

"V1220","V1221","V1222","V1223","V1224","V1225","V1226","V1227","V1228","V1229",

"V2230","V2231","V2232","V2233","V2234","V2235","V2236","V2237","V2238","V2239",

"V3240","V3241","V3242","V3243","V3244","V3245","V3246","V3247","V3248","V3249",

"V4250","V4251","V4252","V4253","V4254","V4255","V4256","V4257","V4258","V4259",

"V5260","V5261","V5262","V5263","V5264","V5265","V5266","V5267","V5268","V5269",

"V6270","V6271","V6272","V6273","V6274","V6275","V6276","V6277","V6278","V6279","class"]

# Assign the column names to the dataset

new_df.columns=final_df_columns

new_df.to_csv("new data with target class.csv")

new_df.head()

| | Age | Sex | Height | Weight | QRS_Dur | P-R_Int | Q-T_Int | T_Int | P_Int | QRS | ... | V6271 | V6272 | V6273 | V6274 | V6275 | V6276 | V6277 | V6278 | V6279 | class |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 75.0 | 0.0 | 190.0 | 80.0 | 91.0 | 193.0 | 371.0 | 174.0 | 121.0 | -16.0 | ... | 0.0 | 9.0 | -0.9 | 0.0 | 0.0 | 0.9 | 2.9 | 23.3 | 49.4 | 8.0 |

| 1 | 56.0 | 1.0 | 165.0 | 64.0 | 81.0 | 174.0 | 401.0 | 149.0 | 39.0 | 25.0 | ... | 0.0 | 8.5 | 0.0 | 0.0 | 0.0 | 0.2 | 2.1 | 20.4 | 38.8 | 6.0 |

| 2 | 54.0 | 0.0 | 172.0 | 95.0 | 138.0 | 163.0 | 386.0 | 185.0 | 102.0 | 96.0 | ... | 0.0 | 9.5 | -2.4 | 0.0 | 0.0 | 0.3 | 3.4 | 12.3 | 49.0 | 10.0 |

| 3 | 55.0 | 0.0 | 175.0 | 94.0 | 100.0 | 202.0 | 380.0 | 179.0 | 143.0 | 28.0 | ... | 0.0 | 12.2 | -2.2 | 0.0 | 0.0 | 0.4 | 2.6 | 34.6 | 61.6 | 1.0 |

| 4 | 75.0 | 0.0 | 190.0 | 80.0 | 88.0 | 181.0 | 360.0 | 177.0 | 103.0 | -16.0 | ... | 0.0 | 13.1 | -3.6 | 0.0 | 0.0 | -0.1 | 3.9 | 25.4 | 62.8 | 7.0 |

5 rows × 279 columns

# Drop the target attribute to create the final data frame.

final_df = new_df.drop(columns ="class")

final_df.to_csv("FInal Dataset with dropped class Attribute.csv")

final_df.head()

| | Age | Sex | Height | Weight | QRS_Dur | P-R_Int | Q-T_Int | T_Int | P_Int | QRS | ... | V6270 | V6271 | V6272 | V6273 | V6274 | V6275 | V6276 | V6277 | V6278 | V6279 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 75.0 | 0.0 | 190.0 | 80.0 | 91.0 | 193.0 | 371.0 | 174.0 | 121.0 | -16.0 | ... | -0.3 | 0.0 | 9.0 | -0.9 | 0.0 | 0.0 | 0.9 | 2.9 | 23.3 | 49.4 |

| 1 | 56.0 | 1.0 | 165.0 | 64.0 | 81.0 | 174.0 | 401.0 | 149.0 | 39.0 | 25.0 | ... | -0.5 | 0.0 | 8.5 | 0.0 | 0.0 | 0.0 | 0.2 | 2.1 | 20.4 | 38.8 |

| 2 | 54.0 | 0.0 | 172.0 | 95.0 | 138.0 | 163.0 | 386.0 | 185.0 | 102.0 | 96.0 | ... | 0.9 | 0.0 | 9.5 | -2.4 | 0.0 | 0.0 | 0.3 | 3.4 | 12.3 | 49.0 |

| 3 | 55.0 | 0.0 | 175.0 | 94.0 | 100.0 | 202.0 | 380.0 | 179.0 | 143.0 | 28.0 | ... | 0.1 | 0.0 | 12.2 | -2.2 | 0.0 | 0.0 | 0.4 | 2.6 | 34.6 | 61.6 |

| 4 | 75.0 | 0.0 | 190.0 | 80.0 | 88.0 | 181.0 | 360.0 | 177.0 | 103.0 | -16.0 | ... | -0.4 | 0.0 | 13.1 | -3.6 | 0.0 | 0.0 | -0.1 | 3.9 | 25.4 | 62.8 |

5 rows × 278 columns

pd.isnull(final_df).sum().sum()

0

2. Exploratory Data Analysis (EDA)

List of all 16 class names.

# List with the class names

class_names = ["Normal",

"Ischemic changes (CAD)",

"Old Anterior Myocardial Infraction",

"Old Inferior Myocardial Infraction",

"Sinus tachycardy",

"Sinus bradycardy",

"Ventricular Premature Contraction (PVC)",

"Supraventricular Premature Contraction",

"Left Boundle branch block",

"Right boundle branch block",

"1.Degree AtrioVentricular block",

"2.Degree AV block",

"3.Degree AV block",

"Left Ventricule hypertrophy",

"Atrial Fibrillation or Flutter",

"Others"]

Analyzing the dataset and checking how many examples each class has:

# Check the data types of selected attributes

final_df[["Age","Heart_Rate"]].dtypes

Age float64 Heart_Rate float64 dtype: object

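We sort the dataset by the class attribute in order to count the number of instances available for each class.

# Sort by the class attribute.

t=new_df.sort_values(by=["class"])

# Count the number of instances of each class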

la = t["class"].value_counts(sort=False).tolist()

la

[245, 44, 15, 15, 13, 25, 3, 2, 9, 50, 4, 5, 22]

# Visualize the dataset by class

labels = class_names

values = la[0:10]

values.extend([0,0,0])

values.extend(la[10:13])

print(values)

# We rescale the counts with a base-10 logarithm so that the small classes stay visible in the plots

Log_Norm = []

for i in values:

    Log_Norm.append(mt.log10(i+1))

fig1, ax1 = plt.subplots(figsize=(16,9))

patches = plt.pie(Log_Norm, autopct='%1.1f%%', startangle=90)

leg = plt.legend( loc = 'best', labels=['%s, %1.1f %%' % (l, s) for l, s in zip(labels, Log_Norm)])

plt.axis('equal')

for text in leg.get_texts():

    plt.setp(text, color = 'Black')

plt.tight_layout()

plt.show()

[245, 44, 15, 15, 13, 25, 3, 2, 9, 50, 0, 0, 0, 4, 5, 22]
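The zero counts for the three empty classes are inserted by hand above. A sketch of an alternative is to let pandas fill them in with `reindex`, which does not depend on knowing in advance which classes are absent. The toy label series is an assumption made for illustration.

```python
import pandas as pd

# Toy labels (assumption): classes 11 to 13 are absent, as in the counts above
labels = pd.Series([1, 1, 1, 2, 10, 10, 14, 16])

# Count per class, then align to the full range 1..16, filling absent classes with 0
counts = labels.value_counts().reindex(range(1, 17), fill_value=0)
print(counts.tolist())
```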

fdict = {}

j = 0

for i in labels:

    fdict[i] = Log_Norm[j]

    j+=1

fdf = pd.DataFrame(fdict,index=[0])

fdf = fdf.rename(index={0: ''})

fig, ax = plt.subplots(figsize=(15,10))

fdf.plot(kind="bar",ax=ax)

ax.set_title("(Log)-Histogram of the Classes Distributions ")

ax.set_ylabel('Number of examples')

ax.set_xlabel('Classes')

fig.tight_layout()

plt.show()

# Descriptive statistics grouped by class

import scipy.stats as sp

grouped_mean = new_df.groupby(["class"]).mean()

grouped_median = new_df.groupby(["class"]).median()

grouped_mode = new_df.groupby(["class"]).agg(lambda x: sp.mode(x, axis=None, keepdims=True)[0])

gouped_std = new_df.groupby(['class']).std()

grouped_median.head()

| class | Age | Sex | Height | Weight | QRS_Dur | P-R_Int | Q-T_Int | T_Int | P_Int | QRS | ... | V6270 | V6271 | V6272 | V6273 | V6274 | V6275 | V6276 | V6277 | V6278 | V6279 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1.0 | 46.0 | 1.0 | 163.0 | 68.0 | 84.0 | 156.0 | 367.0 | 160.0 | 91.0 | 41.0 | ... | -0.1 | 0.0 | 9.1 | -0.9 | 0.0 | 0.0 | 0.5 | 1.6 | 19.10 | 31.7 |

| 2.0 | 55.0 | 1.0 | 160.5 | 70.0 | 90.5 | 159.0 | 366.0 | 176.5 | 91.0 | 37.5 | ... | -0.8 | 0.0 | 9.6 | -1.3 | 0.0 | 0.0 | 0.5 | -0.8 | 20.05 | 11.8 |

| 3.0 | 50.0 | 0.0 | 170.0 | 74.0 | 93.0 | 163.0 | 351.0 | 196.0 | 96.0 | 48.0 | ... | -0.2 | 0.0 | 6.3 | -2.0 | 0.0 | 0.0 | 0.8 | 0.6 | 12.30 | 19.3 |

| 4.0 | 57.0 | 0.0 | 165.0 | 76.0 | 93.0 | 157.0 | 357.0 | 161.0 | 95.0 | -2.0 | ... | -0.2 | -0.7 | 6.3 | -0.5 | 0.0 | 0.0 | 0.7 | 0.3 | 10.90 | 12.0 |

| 5.0 | 34.0 | 1.0 | 160.0 | 51.0 | 82.0 | 155.0 | 302.0 | 153.0 | 81.0 | 52.0 | ... | -0.3 | 0.0 | 5.1 | -2.1 | 0.0 | 0.0 | 0.5 | 0.9 | 6.00 | 10.7 |

5 rows × 278 columns

# Correlation heatmap

sns.heatmap(new_df[['Age', 'Sex', 'Height', 'Weight','class']].corr(), cmap='CMRmap',center=0)

plt.show()

Handling outliers and data visualization

# Look for pairwise relationships and outliers

g = sns.PairGrid(final_df, vars=['Age', 'Sex', 'Height', 'Weight'],

hue='Sex', palette='BrBG')

g.map(plt.scatter, alpha=0.8)

g.add_legend();

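According to the scatter plots, the Height and Weight attributes contain a few outliers. We check the largest values of height and weight.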

sorted(final_df['Height'], reverse=True)[:10]

[780.0, 608.0, 190.0, 190.0, 190.0, 188.0, 186.0, 186.0, 186.0, 185.0]

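The tallest person ever recorded measured 272 cm (1940), followed by 267 cm (1905) and 263.5 cm (1969). Heights of 780 and 608 cm are therefore impossible, and we replace them with 180 and 108 cm, respectively.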

final_df['Height']=final_df['Height'].replace(608,108)

final_df['Height']=final_df['Height'].replace(780,180)

sorted(final_df['Weight'], reverse=True)[:10]

[176.0, 124.0, 110.0, 106.0, 105.0, 105.0, 104.0, 104.0, 100.0, 98.0]

A weight of 176 kg is possible, so we keep it in the data frame.
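The checks above were made by eye and with domain knowledge. A common complementary rule of thumb is the 1.5 × IQR rule that box plots use to draw their whiskers; the sketch below is one possible implementation. The helper name `iqr_outliers` and the toy heights are hypothetical.

```python
import pandas as pd

def iqr_outliers(series, k=1.5):
    """Return a boolean mask marking values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = series.quantile(0.25), series.quantile(0.75)
    iqr = q3 - q1
    return (series < q1 - k * iqr) | (series > q3 + k * iqr)

# Toy heights in cm (assumption), including the two implausible values seen above
heights = pd.Series([160, 164, 170, 165, 172, 158, 780, 608, 168, 175])
print(heights[iqr_outliers(heights)].tolist())
```

A flagged value is only a candidate: as with the 176 kg weight, whether to correct, drop or keep it remains a judgment call.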

g = sns.PairGrid(final_df, vars=['Age', 'Sex', 'Height', 'Weight'],

hue='Sex', palette='RdBu_r')

g.map(plt.scatter, alpha=0.8)

g.add_legend();

sns.boxplot(data=final_df[["QRS_Dur","P-R_Int","Q-T_Int","T_Int","P_Int"]]);

The PR interval is the time (in milliseconds) from the onset of the P wave to the start of the QRS complex; it normally lasts between 120 and 200 ms.

final_df['P-R_Int'].value_counts().sort_index().head().plot(kind='bar')

plt.xlabel('P-R Interval Values')

plt.ylabel('Count');

final_df['P-R_Int'].value_counts().sort_index().tail().plot(kind='bar')

plt.xlabel('P-R Interval Values')

plt.ylabel('Count');

The P-R interval data include the outlier value 0 (18 occurrences). We keep these values.
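Given the normal range of 120 to 200 ms, values outside it can be listed directly instead of being read off the bar charts. This is a small sketch on made-up values; on the real data the same expression would be applied to `final_df['P-R_Int']`.

```python
import pandas as pd

# Toy P-R intervals in ms (assumption)
pr = pd.Series([0, 0, 118, 156, 163, 199, 210, 524], name="P-R_Int")

# between() is inclusive on both ends by default
outside = pr[~pr.between(120, 200)]
print(outside.tolist())
```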

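The QT interval measures the time between the start of the Q wave and the end of the T wave in the heart's electrical cycle. The outliers that appear in the Q-T interval box may be related to the outliers in the T interval data.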

final_df['T_Int'].value_counts().sort_index(ascending=False).head().plot(kind='bar')

plt.xlabel('T Interval Values')

plt.ylabel('Count');

final_df['T_Int'].value_counts().sort_index(ascending=False).tail().plot(kind='bar')

plt.xlabel('T Interval Values')

plt.ylabel('Count');

sns.boxplot(data=final_df[["QRS","T","P","J","Heart_Rate"]]);

sns.boxplot(data=final_df[["Q_Wave","R_Wave","S_Wave"]])

sns.swarmplot(data=final_df[["Q_Wave","R_Wave","S_Wave"]]);

sns.boxplot(data=final_df[["R'_Wave","S'_Wave","Int_Def","Rag_R_Nom"]]);

S'_Wave contains only zeros, not NaN, so we cannot assume that it contains outliers.

final_df["R'_Wave"].value_counts().sort_index(ascending=False)

24.0 1 16.0 1 12.0 2 0.0 448 Name: R'_Wave, dtype: int64

final_df["S'_Wave"].value_counts().sort_index(ascending=False)

0.0 452 Name: S'_Wave, dtype: int64

final_df["Rag_R_Nom"].value_counts().sort_index(ascending=False)

1.0 1 0.0 451 Name: Rag_R_Nom, dtype: int64

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["Diph_R_Nom","Rag_P_Nom","Diph_P_Nom","Rag_T_Nom","Diph_T_Nom"]]);

final_df["Diph_R_Nom"].value_counts().sort_index(ascending=False)

1.0 5 0.0 447 Name: Diph_R_Nom, dtype: int64

final_df["Rag_P_Nom"].value_counts().sort_index(ascending=False)

1.0 5 0.0 447 Name: Rag_P_Nom, dtype: int64

final_df["Diph_P_Nom"].value_counts().sort_index(ascending=False)

1.0 2 0.0 450 Name: Diph_P_Nom, dtype: int64

final_df["Rag_T_Nom"].value_counts().sort_index(ascending=False)

1.0 2 0.0 450 Name: Rag_T_Nom, dtype: int64

final_df["Diph_T_Nom"].value_counts().sort_index(ascending=False)

1.0 4 0.0 448 Name: Diph_T_Nom, dtype: int64

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["DII00", "DII01","DII02", "DII03", "DII04","DII05","DII06","DII07","DII08","DII09","DII10","DII11"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["DIII00","DIII01","DIII02", "DIII03", "DIII04","DIII05","DIII06",

"DIII07","DIII08","DIII09","DIII10","DIII11"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["AVR00","AVR01","AVR02","AVR03","AVR04","AVR05",

"AVR06","AVR07","AVR08","AVR09","AVR10","AVR11"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["AVL00","AVL01","AVL02","AVL03","AVL04","AVL05","AVL06","AVL07","AVL08","AVL09","AVL10","AVL11"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["AVF00","AVF01","AVF02","AVF03","AVF04","AVF05","AVF06","AVF07","AVF08","AVF09","AVF10","AVF11"]]);

sns.set(rc={'figure.figsize':(12,6)})

sns.boxplot(data=final_df[["V100","V101","V102","V103","V104","V105","V106","V107","V108","V109","V110","V111"]]);

final_df["V101"].value_counts().sort_index(ascending=False)

216.0 1 112.0 1 84.0 1 72.0 1 68.0 1 64.0 1 48.0 6 44.0 6 40.0 13 36.0 36 32.0 63 28.0 81 24.0 88 20.0 57 16.0 13 12.0 4 0.0 79 Name: V101, dtype: int64

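V101 has an outlier, and the corresponding features of the other leads (V201, V301, V501) show a similar outlier. Because our data are heavily imbalanced, we cannot conclude that these outliers should be removed.

For example, class 8 (Supraventricular Premature Contraction) has only 2 instances, and class 7 (Ventricular Premature Contraction, PVC) has only 3. The outliers in our plots may belong to these instances and therefore need to be kept.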

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V200","V201","V202","V203","V204","V205","V206","V207","V208","V209","V210","V211"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V300","V301","V302","V303","V304","V305","V306","V307","V308","V309","V310","V311"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V400","V401","V402","V403","V404","V405","V406","V407","V408","V409","V410","V411"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V500","V501","V502","V503","V504","V505","V506","V507","V508","V509","V510","V511"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V600","V601","V602","V603","V604","V605","V606","V607","V608","V609","V610","V611"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["JJ_Wave","Amp_Q_Wave","Amp_R_Wave","Amp_S_Wave","R_Prime_Wave","S_Prime_Wave","P_Wave","T_Wave"]]);

sns.set(rc={'figure.figsize':(13.7,5.27)})

sns.boxplot(data=final_df[["QRSA","QRSTA","DII170","DII171","DII172","DII173","DII174","DII175","DII176","DII177","DII178","DII179"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["DIII180","DIII181","DIII182","DIII183","DIII184","DIII185","DIII186","DIII187","DIII188","DIII189"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["AVR190","AVR191","AVR192","AVR193","AVR194","AVR195","AVR196","AVR197","AVR198","AVR199"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["AVL200","AVL201","AVL202","AVL203","AVL204","AVL205","AVL206","AVL207","AVL208","AVL209"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["AVF210","AVF211","AVF212","AVF213","AVF214","AVF215","AVF216","AVF217","AVF218","AVF219"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V1220","V1221","V1222","V1223","V1224","V1225","V1226","V1227","V1228","V1229"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V2230","V2231","V2232","V2233","V2234","V2235","V2236","V2237","V2238","V2239"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V3240","V3241","V3242","V3243","V3244","V3245","V3246","V3247","V3248","V3249"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V4250","V4251","V4252","V4253","V4254","V4255","V4256","V4257","V4258","V4259"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V5260","V5261","V5262","V5263","V5264","V5265","V5266","V5267","V5268","V5269"]]);

sns.set(rc={'figure.figsize':(11.7,5.27)})

sns.boxplot(data=final_df[["V6270","V6271","V6272","V6273","V6274","V6275","V6276","V6277","V6278","V6279"]]);

class_list = []

j = 1

new_class_names =[]

for i in class_names:

    if(i != "1.Degree AtrioVentricular block" and i!= '3.Degree AV block' and i!= "2.Degree AV block" ):

        class_list.append([j,i,grouped_mean["Age"][j],grouped_median["Age"][j],grouped_mode["Age"][j],gouped_std["Age"][j]])

        new_class_names.append(i)

    j+=1

AgeClass = {

"AgeAVG": grouped_mean["Age"],"AgeMdian":grouped_median["Age"],

"AgeMode": grouped_mode["Age"],"AgeStd":gouped_std['Age'],"Class":new_class_names }

AgeClassDF =pd.DataFrame(AgeClass)

fig, ax = plt.subplots(figsize=(15,10))

AgeClassDF.plot(kind="bar",ax=ax)

ax.set_title("Plot of the Age statistics")

ax.set_ylabel('Values of Mean, Median, Mode and Standard Deviation')

ax.set_xlabel('Classes')

fig.tight_layout()

plt.show()

AgeClassDF

| class | AgeAVG | AgeMdian | AgeMode | AgeStd | Class |

|---|---|---|---|---|---|

| 1.0 | 46.273469 | 46.0 | 0.0 | 14.556092 | Normal |

| 2.0 | 51.750000 | 55.0 | 0.0 | 14.160418 | Ischemic changes (CAD) |

| 3.0 | 53.333333 | 50.0 | 0.0 | 11.286317 | Old Anterior Myocardial Infraction |

| 4.0 | 57.266667 | 57.0 | 0.0 | 11.895177 | Old Inferior Myocardial Infraction |

| 5.0 | 30.846154 | 34.0 | 0.0 | 27.904140 | Sinus tachycardy |

| 6.0 | 47.920000 | 46.0 | 0.0 | 13.165359 | Sinus bradycardy |

| 7.0 | 60.666667 | 67.0 | 0.0 | 18.339393 | Ventricular Premature Contraction (PVC) |

| 8.0 | 71.000000 | 71.0 | 0.0 | 5.656854 | Supraventricular Premature Contraction |

| 9.0 | 58.222222 | 66.0 | 0.0 | 21.211894 | Left Boundle branch block |

| 10.0 | 38.920000 | 38.5 | 0.0 | 17.692198 | Right boundle branch block |

| 14.0 | 19.500000 | 17.5 | 0.0 | 11.090537 | Left Ventricule hypertrophy |

| 15.0 | 57.400000 | 58.0 | 0.0 | 6.188699 | Atrial Fibrillation or Flutter |

| 16.0 | 44.272727 | 43.5 | 0.0 | 19.644900 | Others |
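The same block of code is repeated below for Sex, Weight, Height and QRS duration. One way to avoid the repetition is a small helper that computes the four statistics for any feature. The function `per_class_stats` is hypothetical and the toy frame is made up. Note also that the mode column in the table above is 0.0 for every class, which may indicate that the mode was not computed per column; the sketch uses `Series.mode()` per group instead.

```python
import pandas as pd

def per_class_stats(df, feature, label_col="class"):
    """Mean, median, mode and standard deviation of one feature per class."""
    grouped = df.groupby(label_col)[feature]
    return pd.DataFrame({
        "mean": grouped.mean(),
        "median": grouped.median(),
        # Series.mode() can return several values; keep the smallest one
        "mode": grouped.agg(lambda s: s.mode().iloc[0]),
        "std": grouped.std(),
    })

# Toy data (assumption): two classes with a handful of ages
toy = pd.DataFrame({"class": [1, 1, 1, 2, 2], "Age": [40, 40, 46, 55, 61]})
stats = per_class_stats(toy, "Age")
print(stats)
```

On the real data this would be called as `per_class_stats(new_df, "Age")`, and likewise for the other features.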

Does a person's sex affect their condition?

Basic statistics for males and females

class_list = []

j = 1

new_class_names =[]

for i in class_names:

    if(i != "1.Degree AtrioVentricular block" and i!= '3.Degree AV block' and i!= "2.Degree AV block" ):

        class_list.append([j,i,grouped_mean["Sex"][j],grouped_median["Sex"][j],grouped_mode["Sex"][j],gouped_std["Sex"][j]])

        new_class_names.append(i)

    j+=1

SexClass = {

"SexAVG": grouped_mean["Sex"],"SexMdian":grouped_median["Sex"],

"SexMode": grouped_mode["Sex"],"SexStd":gouped_std['Sex'],"Class":new_class_names }

SexClassDF =pd.DataFrame(SexClass)

fig, ax = plt.subplots(figsize=(15,10))

SexClassDF.plot(kind="bar",ax=ax)

ax.set_title("Plot of the Sex statistics")

ax.set_ylabel('Values of Mean, Median, Mode and Standard Deviation')

ax.set_xlabel('Classes')

fig.tight_layout()

plt.show()

SexClassDF

| class | SexAVG | SexMdian | SexMode | SexStd | Class |

|---|---|---|---|---|---|

| 1.0 | 0.653061 | 1.0 | 0.0 | 0.476970 | Normal |

| 2.0 | 0.590909 | 1.0 | 0.0 | 0.497350 | Ischemic changes (CAD) |

| 3.0 | 0.000000 | 0.0 | 0.0 | 0.000000 | Old Anterior Myocardial Infraction |

| 4.0 | 0.266667 | 0.0 | 0.0 | 0.457738 | Old Inferior Myocardial Infraction |

| 5.0 | 0.692308 | 1.0 | 0.0 | 0.480384 | Sinus tachycardy |

| 6.0 | 0.440000 | 0.0 | 0.0 | 0.506623 | Sinus bradycardy |

| 7.0 | 0.000000 | 0.0 | 0.0 | 0.000000 | Ventricular Premature Contraction (PVC) |

| 8.0 | 0.000000 | 0.0 | 0.0 | 0.000000 | Supraventricular Premature Contraction |

| 9.0 | 0.555556 | 1.0 | 0.0 | 0.527046 | Left Boundle branch block |

| 10.0 | 0.440000 | 0.0 | 0.0 | 0.501427 | Right boundle branch block |

| 14.0 | 0.250000 | 0.0 | 0.0 | 0.500000 | Left Ventricule hypertrophy |

| 15.0 | 0.800000 | 1.0 | 0.0 | 0.447214 | Atrial Fibrillation or Flutter |

| 16.0 | 0.318182 | 0.0 | 0.0 | 0.476731 | Others |

Does a patient's weight affect their condition?

class_list = []

j = 1

new_class_names =[]

for i in class_names:

    if(i != "1.Degree AtrioVentricular block" and i!= '3.Degree AV block' and i!= "2.Degree AV block" ):

        class_list.append([j,i,grouped_mean["Weight"][j],grouped_median["Weight"][j],grouped_mode["Weight"][j],gouped_std["Weight"][j]])

        new_class_names.append(i)

    j+=1

WeightClass = {

"WeightAVG": grouped_mean["Weight"],"WeightMdian":grouped_median["Weight"],

"WeightMode": grouped_mode["Weight"],"WeightStd":gouped_std['Weight'],"Class":new_class_names }

WeightClassDF =pd.DataFrame(WeightClass)

fig, ax = plt.subplots(figsize=(15,10))

WeightClassDF.plot(kind="bar",ax=ax)

ax.set_title("Plot of the Weights statistics")

ax.set_ylabel('Values of Mean, Median, Mode and Standard Deviation')

ax.set_xlabel('Classes')

fig.tight_layout()

plt.show()

WeightClassDF

| class | WeightAVG | WeightMdian | WeightMode | WeightStd | Class |

|---|---|---|---|---|---|

| 1.0 | 68.669388 | 68.0 | 0.0 | 14.454595 | Normal |

| 2.0 | 73.363636 | 70.0 | 0.0 | 20.617323 | Ischemic changes (CAD) |

| 3.0 | 76.866667 | 74.0 | 0.0 | 12.397388 | Old Anterior Myocardial Infraction |

| 4.0 | 73.866667 | 76.0 | 0.0 | 9.318696 | Old Inferior Myocardial Infraction |

| 5.0 | 44.615385 | 51.0 | 0.0 | 29.259011 | Sinus tachycardy |

| 6.0 | 68.000000 | 66.0 | 0.0 | 11.045361 | Sinus bradycardy |

| 7.0 | 73.000000 | 74.0 | 0.0 | 7.549834 | Ventricular Premature Contraction (PVC) |

| 8.0 | 79.000000 | 79.0 | 0.0 | 1.414214 | Supraventricular Premature Contraction |

| 9.0 | 66.444444 | 72.0 | 0.0 | 18.808981 | Left Boundle branch block |

| 10.0 | 63.300000 | 63.5 | 0.0 | 16.579124 | Right boundle branch block |

| 14.0 | 50.250000 | 51.5 | 0.0 | 19.653244 | Left Ventricule hypertrophy |

| 15.0 | 76.000000 | 78.0 | 0.0 | 13.209845 | Atrial Fibrillation or Flutter |

| 16.0 | 68.136364 | 70.5 | 0.0 | 18.061523 | Others |

Does a person's height affect their condition?

class_list = []

j = 1

new_class_names =[]

for i in class_names:

    if(i != "1.Degree AtrioVentricular block" and i!= '3.Degree AV block' and i!= "2.Degree AV block" ):

        class_list.append([j,i,grouped_mean["Height"][j],grouped_median["Height"][j],grouped_mode["Height"][j],gouped_std["Height"][j]])

        new_class_names.append(i)

    j+=1

HeightClass = {

"HeightAVG": grouped_mean["Height"],"HeightMdian":grouped_median["Height"],

"HeightMode": grouped_mode["Height"],"HeightStd":gouped_std['Height'],"Class":new_class_names }

HeightClassDF =pd.DataFrame(HeightClass)

fig, ax = plt.subplots(figsize=(15,10))

HeightClassDF.plot(kind="bar",ax=ax)

ax.set_title("Plot of the Heights statistics")

ax.set_ylabel('Values of Mean, Median, Mode and Standard Deviation')

ax.set_xlabel('Classes')

fig.tight_layout()

plt.show()

HeightClassDF

| class | HeightAVG | HeightMdian | HeightMode | HeightStd | Class |

|---|---|---|---|---|---|

| 1.0 | 164.102041 | 163.0 | 0.0 | 8.048126 | Normal |

| 2.0 | 163.068182 | 160.5 | 0.0 | 7.456534 | Ischemic changes (CAD) |

| 3.0 | 170.600000 | 170.0 | 0.0 | 4.579457 | Old Anterior Myocardial Infraction |

| 4.0 | 166.000000 | 165.0 | 0.0 | 7.973169 | Old Inferior Myocardial Infraction |

| 5.0 | 233.615385 | 160.0 | 0.0 | 208.500015 | Sinus tachycardy |

| 6.0 | 165.040000 | 165.0 | 0.0 | 6.996904 | Sinus bradycardy |

| 7.0 | 178.000000 | 176.0 | 0.0 | 11.135529 | Ventricular Premature Contraction (PVC) |

| 8.0 | 176.500000 | 176.5 | 0.0 | 19.091883 | Supraventricular Premature Contraction |

| 9.0 | 155.666667 | 158.0 | 0.0 | 16.209565 | Left Boundle branch block |

| 10.0 | 163.520000 | 165.0 | 0.0 | 13.699754 | Right boundle branch block |

| 14.0 | 156.000000 | 167.0 | 0.0 | 24.097026 | Left Ventricule hypertrophy |

| 15.0 | 161.400000 | 160.0 | 0.0 | 4.098780 | Atrial Fibrillation or Flutter |

| 16.0 | 165.000000 | 168.0 | 0.0 | 13.288018 | Others |

Does the QRS duration affect a person's condition?

class_list = []

j = 1

new_class_names =[]

for i in class_names:

    if(i != "1.Degree AtrioVentricular block" and i!= '3.Degree AV block' and i!= "2.Degree AV block" ):

        class_list.append([j,i,grouped_mean["QRS_Dur"][j],grouped_median["QRS_Dur"][j],grouped_mode["QRS_Dur"][j],gouped_std["QRS_Dur"][j]])

        new_class_names.append(i)

    j+=1

QRSTClass = {

"QRSDAVG": grouped_mean["QRS_Dur"],"QRSDMdian":grouped_median["QRS_Dur"],

"QRSDMode": grouped_mode["QRS_Dur"],"QRSDStd":gouped_std['QRS_Dur'],"Class":new_class_names }

QRSTClassDF =pd.DataFrame(QRSTClass)

fig, ax = plt.subplots(figsize=(15,10))

QRSTClassDF.plot(kind="bar",ax=ax)

ax.set_title("Plot of the QRS Duration statistics")

ax.set_ylabel('Values of Mean, Median, Mode and Standard Deviation')

ax.set_xlabel('Classes')

fig.tight_layout()

plt.show()

QRSTClassDF

| class | QRSDAVG | QRSDMdian | QRSDMode | QRSDStd | Class |
|---|---|---|---|---|---|
| 1.0 | 84.277551 | 84.0 | 0.0 | 8.735439 | Normal |
| 2.0 | 89.818182 | 90.5 | 0.0 | 10.207778 | Ischemic changes (CAD) |
| 3.0 | 93.333333 | 93.0 | 0.0 | 9.131317 | Old Anterior Myocardial Infraction |
| 4.0 | 89.866667 | 93.0 | 0.0 | 10.979635 | Old Inferior Myocardial Infraction |
| 5.0 | 88.000000 | 82.0 | 0.0 | 29.796532 | Sinus tachycardy |
| 6.0 | 83.160000 | 83.0 | 0.0 | 8.561931 | Sinus bradycardy |
| 7.0 | 96.333333 | 92.0 | 0.0 | 11.150486 | Ventricular Premature Contraction (PVC) |
| 8.0 | 98.500000 | 98.5 | 0.0 | 10.606602 | Supraventricular Premature Contraction |
| 9.0 | 154.444444 | 147.0 | 0.0 | 16.409685 | Left Boundle branch block |
| 10.0 | 97.580000 | 95.0 | 0.0 | 15.129495 | Right boundle branch block |
| 14.0 | 99.250000 | 97.5 | 0.0 | 6.396614 | Left Ventricule hypertrophy |
| 15.0 | 88.400000 | 82.0 | 0.0 | 19.034180 | Atrial Fibrillation or Flutter |
| 16.0 | 92.136364 | 94.0 | 0.0 | 11.825298 | Others |
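The per-class summary above is assembled from several separately computed grouped objects. As a rough sketch, a table of the same shape can be produced in one pass with `groupby().agg()`. The tiny `toy` frame and the helper `first_mode` are made up for illustration and are not part of the project's data or code.

```python
import pandas as pd

# Hypothetical toy data standing in for the real ECG table.
toy = pd.DataFrame({
    "class":   [1, 1, 1, 2, 2, 2],
    "QRS_Dur": [80.0, 84.0, 84.0, 90.0, 90.0, 96.0],
})

def first_mode(values: pd.Series) -> float:
    """Return the smallest of the most frequent values in a column."""
    return values.mode().iloc[0]

# One row per class, one column per statistic (named aggregation).
qrs_stats = toy.groupby("class")["QRS_Dur"].agg(
    QRSDAVG="mean",
    QRSDMdian="median",
    QRSDMode=first_mode,
    QRSDStd="std",
)
print(qrs_stats)
```

Computing all four statistics from the same `groupby` keeps them aligned on the class index, so no manual counter is needed to match rows to class labels.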

sns.jointplot(x="Sex", y="Age", data=final_df, kind='kde')

sns.jointplot(x="Sex", y="Weight", data=final_df, kind='resid')

sns.jointplot(x="Age", y="Weight", data=final_df, kind='scatter')

sns.jointplot(x="QRS", y="Age", data=final_df, kind='reg',color="green")

sns.jointplot(x="QRS", y="Sex", data=final_df, kind='reg',color="navy")

sns.jointplot(x="QRS", y="Weight", data=final_df, kind='reg',color="brown")

g = sns.JointGrid(x="Age", y="Weight", data=final_df)

g.plot_joint(sns.regplot, order=2)

g.plot_marginals(sns.histplot,color="red")

g = sns.JointGrid(x="Weight", y="QRS", data=final_df)

g.plot_joint(sns.regplot, order=2)

g.plot_marginals(sns.histplot)

g = sns.JointGrid(x="Age", y="QRS", data=final_df)

g.plot_joint(sns.regplot, order=2)

g.plot_marginals(sns.histplot)

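2. Detailed code for model training

Arrhythmia classification: model training

The dataset used in this project is available from the UCI Machine Learning Repository.

- It consists of 452 distinct examples spread over 16 classes. Of the 452 examples, 245 are "normal" people. There are also 12 different types of arrhythmia, of which the most represented are "coronary artery disease" and "right bundle branch block".

- There are 279 features, including each patient's age, sex, weight, and height, plus measurements derived from the ECG. The number of features is clearly large relative to the number of available examples.

- The goal is to predict whether a person has an arrhythmia and, if so, to assign them to one of the 12 available arrhythmia groups.

Importing the necessary libraries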

import pandas as pd

import numpy as np

import scipy as sp

import math as mt

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns

from sklearn.impute import SimpleImputer

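1. Data preprocessing

Checking the dataset for null values

df=pd.read_csv("arrhythmia.csv",header=None)

# Missing entries are written as '?' in the raw file (np.NaN was removed in NumPy 2.0, so np.nan is used)

df = df.replace('?', np.nan)

Column 13 has more than 350 missing values out of 452 instances, so we drop it. The other attributes have comparatively few null values, so instead of dropping them we replace their null values with the column mean.

# Drop column 13

df.drop(columns = 13, inplace=True)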

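Using a mean imputation strategy

# Work on a copy so the original data is not changed during imputation

new_df = df.copy()

# Flag the columns that contain missing values.

# Note: the assignment in the loop overwrites each such column with its boolean

# missing-value mask, so the original measurements in those columns are not kept.

cols_with_missing = (col for col in new_df.columns if new_df[col].isnull().any())

for col in cols_with_missing:

    new_df[col] = new_df[col].isnull()

# Imputation (SimpleImputer() defaults to strategy='mean')

# my_imputer = SimpleImputer(missing_values=np.nan, strategy='mean')

my_imputer = SimpleImputer()

new_df = pd.DataFrame(my_imputer.fit_transform(new_df))

new_df.columns = df.columns

# Imputed data frame

new_df.head()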

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 270 | 271 | 272 | 273 | 274 | 275 | 276 | 277 | 278 | 279 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 75.0 | 0.0 | 190.0 | 80.0 | 91.0 | 193.0 | 371.0 | 174.0 | 121.0 | -16.0 | ... | 0.0 | 9.0 | -0.9 | 0.0 | 0.0 | 0.9 | 2.9 | 23.3 | 49.4 | 8.0 |
| 1 | 56.0 | 1.0 | 165.0 | 64.0 | 81.0 | 174.0 | 401.0 | 149.0 | 39.0 | 25.0 | ... | 0.0 | 8.5 | 0.0 | 0.0 | 0.0 | 0.2 | 2.1 | 20.4 | 38.8 | 6.0 |
| 2 | 54.0 | 0.0 | 172.0 | 95.0 | 138.0 | 163.0 | 386.0 | 185.0 | 102.0 | 96.0 | ... | 0.0 | 9.5 | -2.4 | 0.0 | 0.0 | 0.3 | 3.4 | 12.3 | 49.0 | 10.0 |
| 3 | 55.0 | 0.0 | 175.0 | 94.0 | 100.0 | 202.0 | 380.0 | 179.0 | 143.0 | 28.0 | ... | 0.0 | 12.2 | -2.2 | 0.0 | 0.0 | 0.4 | 2.6 | 34.6 | 61.6 | 1.0 |
| 4 | 75.0 | 0.0 | 190.0 | 80.0 | 88.0 | 181.0 | 360.0 | 177.0 | 103.0 | -16.0 | ... | 0.0 | 13.1 | -3.6 | 0.0 | 0.0 | -0.1 | 3.9 | 25.4 | 62.8 | 7.0 |

5 rows × 279 columns

# The dataset now has zero null values.

pd.isnull(new_df).sum().sum()

0
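As a point of reference, here is a minimal sketch of mean imputation in which the observed values are kept and missingness is recorded in separate indicator columns. It runs on a made-up three-column frame, uses `pd.to_numeric(..., errors="coerce")` to turn `'?'` into NaN (a substitution for the `replace` call used above), and the `_was_missing` suffix is an illustrative naming choice rather than something taken from the project.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Hypothetical toy frame; '?' marks a missing entry, as in the raw CSV.
raw = pd.DataFrame({0: [1.0, 2.0, 3.0], 1: ["10", "?", "30"], 2: ["?", "5", "7"]})

# Convert every column to numbers; anything unparseable (here '?') becomes NaN.
raw = raw.apply(pd.to_numeric, errors="coerce")

# Remember where values were missing before filling them in.
missing_mask = raw.isnull()

# Replace each NaN with the mean of its column.
imputer = SimpleImputer(missing_values=np.nan, strategy="mean")
imputed = pd.DataFrame(imputer.fit_transform(raw), columns=raw.columns)

# Optional indicator columns, stored next to the imputed values.
for col in raw.columns:
    if missing_mask[col].any():
        imputed[f"{col}_was_missing"] = missing_mask[col].astype(int)

print(imputed)
```

Note that adding indicator columns changes the column count, so a fixed list of column names would have to be extended accordingly.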

Generating the final dataset

# Create the column names

final_df_columns=["Age","Sex","Height","Weight","QRS_Dur",

"P-R_Int","Q-T_Int","T_Int","P_Int","QRS","T","P","J","Heart_Rate",

"Q_Wave","R_Wave","S_Wave","R'_Wave","S'_Wave","Int_Def","Rag_R_Nom",

"Diph_R_Nom","Rag_P_Nom","Diph_P_Nom","Rag_T_Nom","Diph_T_Nom",

"DII00", "DII01","DII02", "DII03", "DII04","DII05","DII06","DII07","DII08","DII09","DII10","DII11",

"DIII00","DIII01","DIII02", "DIII03", "DIII04","DIII05","DIII06","DIII07","DIII08","DIII09","DIII10","DIII11",

"AVR00","AVR01","AVR02","AVR03","AVR04","AVR05","AVR06","AVR07","AVR08","AVR09","AVR10","AVR11",

"AVL00","AVL01","AVL02","AVL03","AVL04","AVL05","AVL06","AVL07","AVL08","AVL09","AVL10","AVL11",

"AVF00","AVF01","AVF02","AVF03","AVF04","AVF05","AVF06","AVF07","AVF08","AVF09","AVF10","AVF11",

"V100","V101","V102","V103","V104","V105","V106","V107","V108","V109","V110","V111",

"V200","V201","V202","V203","V204","V205","V206","V207","V208","V209","V210","V211",

"V300","V301","V302","V303","V304","V305","V306","V307","V308","V309","V310","V311",

"V400","V401","V402","V403","V404","V405","V406","V407","V408","V409","V410","V411",

"V500","V501","V502","V503","V504","V505","V506","V507","V508","V509","V510","V511",

"V600","V601","V602","V603","V604","V605","V606","V607","V608","V609","V610","V611",

"JJ_Wave","Amp_Q_Wave","Amp_R_Wave","Amp_S_Wave","R_Prime_Wave","S_Prime_Wave","P_Wave","T_Wave",

"QRSA","QRSTA","DII170","DII171","DII172","DII173","DII174","DII175","DII176","DII177","DII178","DII179",

"DIII180","DIII181","DIII182","DIII183","DIII184","DIII185","DIII186","DIII187","DIII188","DIII189",

"AVR190","AVR191","AVR192","AVR193","AVR194","AVR195","AVR196","AVR197","AVR198","AVR199",

"AVL200","AVL201","AVL202","AVL203","AVL204","AVL205","AVL206","AVL207","AVL208","AVL209",

"AVF210","AVF211","AVF212","AVF213","AVF214","AVF215","AVF216","AVF217","AVF218","AVF219",

"V1220","V1221","V1222","V1223","V1224","V1225","V1226","V1227","V1228","V1229",

"V2230","V2231","V2232","V2233","V2234","V2235","V2236","V2237","V2238","V2239",

"V3240","V3241","V3242","V3243","V3244","V3245","V3246","V3247","V3248","V3249",

"V4250","V4251","V4252","V4253","V4254","V4255","V4256","V4257","V4258","V4259",

"V5260","V5261","V5262","V5263","V5264","V5265","V5266","V5267","V5268","V5269",

"V6270","V6271","V6272","V6273","V6274","V6275","V6276","V6277","V6278","V6279","class"]

# Assign the column names to the dataset

new_df.columns=final_df_columns

new_df.to_csv("new data with target class.csv")

new_df.head()

| | Age | Sex | Height | Weight | QRS_Dur | P-R_Int | Q-T_Int | T_Int | P_Int | QRS | ... | V6271 | V6272 | V6273 | V6274 | V6275 | V6276 | V6277 | V6278 | V6279 | class |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 75.0 | 0.0 | 190.0 | 80.0 | 91.0 | 193.0 | 371.0 | 174.0 | 121.0 | -16.0 | ... | 0.0 | 9.0 | -0.9 | 0.0 | 0.0 | 0.9 | 2.9 | 23.3 | 49.4 | 8.0 |
| 1 | 56.0 | 1.0 | 165.0 | 64.0 | 81.0 | 174.0 | 401.0 | 149.0 | 39.0 | 25.0 | ... | 0.0 | 8.5 | 0.0 | 0.0 | 0.0 | 0.2 | 2.1 | 20.4 | 38.8 | 6.0 |
| 2 | 54.0 | 0.0 | 172.0 | 95.0 | 138.0 | 163.0 | 386.0 | 185.0 | 102.0 | 96.0 | ... | 0.0 | 9.5 | -2.4 | 0.0 | 0.0 | 0.3 | 3.4 | 12.3 | 49.0 | 10.0 |
| 3 | 55.0 | 0.0 | 175.0 | 94.0 | 100.0 | 202.0 | 380.0 | 179.0 | 143.0 | 28.0 | ... | 0.0 | 12.2 | -2.2 | 0.0 | 0.0 | 0.4 | 2.6 | 34.6 | 61.6 | 1.0 |
| 4 | 75.0 | 0.0 | 190.0 | 80.0 | 88.0 | 181.0 | 360.0 | 177.0 | 103.0 | -16.0 | ... | 0.0 | 13.1 | -3.6 | 0.0 | 0.0 | -0.1 | 3.9 | 25.4 | 62.8 | 7.0 |

5 rows × 279 columns

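The data frame is now fully cleaned and preprocessed, so we separate out the target attribute and store the remaining features as the final data frame.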

target=new_df["class"]

final_df = new_df.drop(columns ="class")

2. Feature scaling and splitting the dataset

We use 80% of the dataset for training and 20% for testing.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(final_df, target ,test_size=0.2, random_state=1)

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

scaler.fit(X_train)

X_train = scaler.transform(X_train)

X_test = scaler.transform(X_test)

import warnings

warnings.filterwarnings('ignore')

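Evaluation strategy

Because the dependent variable is categorical, we use classification models. A good way to evaluate a classifier is to compare precision and recall. The choice of metric also has to reflect what a wrong prediction means in practice: we cannot accept a model that is likely to tell a person with an arrhythmia that they are healthy (a false negative, FN).

We therefore focus on sensitivity (the percentage of sick people correctly identified as having the condition) rather than specificity (the percentage of healthy people correctly identified as not having the condition).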
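To make the two terms concrete, the sketch below computes sensitivity and specificity for a binary "arrhythmia vs. normal" reading of the labels. Treating class 1 as "normal" follows the dataset's labelling; the function name `sensitivity_specificity` and the toy label lists are invented for illustration.

```python
def sensitivity_specificity(y_true, y_pred, normal_label=1):
    """Binary sensitivity/specificity where any label != normal_label counts as 'sick'."""
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        truly_sick = truth != normal_label
        predicted_sick = pred != normal_label
        if truly_sick and predicted_sick:
            tp += 1
        elif truly_sick and not predicted_sick:
            fn += 1  # a sick person told they are healthy
        elif not truly_sick and predicted_sick:
            fp += 1  # a healthy person told they are sick
        else:
            tn += 1
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity

# Made-up labels: class 1 is normal, every other class is some arrhythmia.
y_true = [1, 1, 1, 1, 2, 10, 5, 2]
y_pred = [1, 1, 2, 1, 2, 1, 5, 10]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

In this binary view, predicting the wrong arrhythmia type for a sick person still counts as a true positive; only a "normal" prediction for a sick person lowers sensitivity.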

# Import the evaluation metrics.

from sklearn.metrics import r2_score,mean_squared_error,accuracy_score,recall_score,precision_score,confusion_matrix

3. Model selection

# Stores the results of each model.

result = pd.DataFrame(columns=['Model','Train Accuracy','Test Accuracy'])

KNN classifier

from sklearn.neighbors import KNeighborsClassifier

knnclassifier = KNeighborsClassifier()

knnclassifier.fit(X_train, y_train)

y_pred = knnclassifier.predict(X_test)

knn_train_accuracy = accuracy_score(y_train, knnclassifier.predict(X_train))

knn_test_accuracy = accuracy_score(y_test, knnclassifier.predict(X_test))

# Add this model's row to the results table (DataFrame.append was removed in pandas 2.0)

result.loc[len(result)] = ['KNN Classifier', knn_train_accuracy, knn_test_accuracy]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.65097 | 0.648352 |
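The KNN model above uses the default `n_neighbors=5`. As a hedged sketch of how the neighbour count could be tuned with cross-validation, the example below runs a small grid search on synthetic data from `make_classification`. The grid values and the synthetic dataset are assumptions for illustration, not settings or data from this project.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the ECG features (not the real dataset).
X, y = make_classification(n_samples=300, n_features=20, n_informative=5,
                           n_classes=3, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, test_size=0.2, random_state=1, stratify=y)

# Scaling lives inside the pipeline, so each CV fold is scaled
# using statistics from its own training part only.
pipe = make_pipeline(StandardScaler(), KNeighborsClassifier())
candidate_k = [1, 3, 5, 7, 9, 11]
grid = GridSearchCV(pipe, {"kneighborsclassifier__n_neighbors": candidate_k},
                    cv=5, scoring="accuracy")
grid.fit(X_tr, y_tr)

best_k = grid.best_params_["kneighborsclassifier__n_neighbors"]
test_accuracy = grid.score(X_te, y_te)
print("best k:", best_k, "test accuracy:", test_accuracy)
```

On the real data, some classes have only two or three examples, so a 5-fold split may not be feasible for every class and a smaller `cv` might be needed.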

Logistic regression

from sklearn.linear_model import LogisticRegression

lgclassifier = LogisticRegression(solver = 'saga',random_state = 0)

lgclassifier.fit(X_train, y_train)

y_pred = lgclassifier.predict(X_test)

lg_train_recall = recall_score(y_train, lgclassifier.predict(X_train),average='weighted')

lg_test_recall = recall_score(y_test, lgclassifier.predict(X_test),average='weighted')

lg_train_accuracy = accuracy_score(y_train, lgclassifier.predict(X_train))

lg_test_accuracy = accuracy_score(y_test, lgclassifier.predict(X_test))

# Add this model's row to the results table

result.loc[len(result)] = ['Logestic Regression', lg_train_accuracy, lg_test_accuracy]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |

Decision tree classifier

from sklearn.tree import DecisionTreeClassifier

dtclassifier = DecisionTreeClassifier(criterion = 'entropy', random_state = 0,max_depth=5)

dtclassifier.fit(X_train, y_train)

y_pred_test = dtclassifier.predict(X_test)

y_pred_train = dtclassifier.predict(X_train)

dt_train_accuracy = accuracy_score(y_train,y_pred_train )

dt_test_accuracy = accuracy_score(y_test, y_pred_test)

# Add this model's row to the results table

result.loc[len(result)] = ['Decision Tree Classifier', dt_train_accuracy, dt_test_accuracy]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |

Linear support vector classifier

from sklearn.svm import LinearSVC

lsvclassifier = LinearSVC(C=0.01)

lsvclassifier.fit(X_train, y_train)

y_pred_test = lsvclassifier.predict(X_test)

y_pred_train = lsvclassifier.predict(X_train)

lsvc_train_accuracy_score = accuracy_score(y_train, y_pred_train)

lsvc_test_accuracy_score = accuracy_score(y_test, y_pred_test)

# Add this model's row to the results table

result.loc[len(result)] = ['Linear SVC', lsvc_train_accuracy_score, lsvc_test_accuracy_score]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |
| 3 | Linear SVC | 0.880886 | 0.780220 |

Kernelized support vector classifier

from sklearn import svm

KSVC_clf = svm.SVC(kernel='sigmoid',C=10,gamma=0.001)

KSVC_clf.fit(X_train, y_train)

y_pred_train = KSVC_clf.predict(X_train)

y_pred_test = KSVC_clf.predict(X_test)

ksvc_train_accuracy_score = accuracy_score(y_train, y_pred_train)

ksvc_test_accuracy_score = accuracy_score(y_test, y_pred_test)

# Add this model's row to the results table

result.loc[len(result)] = ['Kernelized SVC', ksvc_train_accuracy_score, ksvc_test_accuracy_score]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |
| 3 | Linear SVC | 0.880886 | 0.780220 |
| 4 | Kernelized SVC | 0.847645 | 0.780220 |

Random forest classifier

from sklearn.ensemble import RandomForestClassifier

rf_clf = RandomForestClassifier(n_estimators=300, criterion='gini',max_features=100,max_depth=10,max_leaf_nodes=30)

rf_clf.fit(X_train, y_train)

RandomForestClassifier(max_depth=10, max_features=100, max_leaf_nodes=30, n_estimators=300)

y_pred_train = rf_clf.predict(X_train)

y_pred_test = rf_clf.predict(X_test)

rf_train_accuracy_score = accuracy_score(y_train, y_pred_train)

rf_test_accuracy_score = accuracy_score(y_test, y_pred_test)

# Add this model's row to the results table

result.loc[len(result)] = ['Random Forest Classifier', rf_train_accuracy_score, rf_test_accuracy_score]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |
| 3 | Linear SVC | 0.880886 | 0.780220 |
| 4 | Kernelized SVC | 0.847645 | 0.780220 |
| 5 | Random Forest Classifier | 0.886427 | 0.747253 |

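In the table above, the best test accuracy is 78.02%, reached by both the Kernelized SVC and the Linear SVC. Logistic regression has the highest training accuracy (93.91%), but its test accuracy is lower (74.73%).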
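A note on the metrics: the code computes `recall_score(..., average='weighted')` for some models, while the results table reports accuracy. For single-label multiclass problems the two coincide, because weighting each class's recall by its share of the samples simply adds up the correct predictions over all samples. The pure-Python check below illustrates this on made-up labels; the helper names are invented for this example.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_class_recall(y_true, y_pred):
    """Recall of each true class, plus the number of true samples per class."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {c: hits[c] / n for c, n in support.items()}, support

def weighted_recall(y_true, y_pred):
    """Per-class recall averaged with weights equal to class frequency."""
    recalls, support = per_class_recall(y_true, y_pred)
    return sum(recalls[c] * support[c] for c in recalls) / len(y_true)

def macro_recall(y_true, y_pred):
    """Per-class recall averaged with equal weight for every class."""
    recalls, _ = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)

# Made-up labels: a dominant class 1 and three rare classes.
y_true = [1, 1, 1, 1, 1, 1, 2, 2, 7, 8]
y_pred = [1, 1, 1, 1, 1, 1, 2, 1, 1, 1]

acc = accuracy(y_true, y_pred)
w_rec = weighted_recall(y_true, y_pred)
m_rec = macro_recall(y_true, y_pred)
print(acc, w_rec, m_rec)
```

Macro-averaged recall gives rare classes the same weight as the majority class, so it drops sharply when rare arrhythmia types are missed; it may be a closer match to the sensitivity concern described in the evaluation strategy.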

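4. Balancing the dataset and reducing dimensionality with PCA

Our dataset is imbalanced: classes 7 and 8 have only 3 and 2 instances respectively, whereas class 1 has 245. We therefore try to address the class imbalance by randomly resampling the dataset with oversampling.

We then use PCA (principal component analysis) to reduce the dimensionality of the resampled dataset, keeping the most informative components with the aim of improving accuracy.

Random oversampling

# Perform oversampling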

from imblearn.over_sampling import RandomOverSampler

ros = RandomOverSampler(random_state=0)

X_resampled, y_resampled = ros.fit_resample(final_df, target)

X_resampled.shape

(3185, 278)

# Count the frequency of each class

import collections

counter = collections.Counter(y_resampled)

counter

Counter({8.0: 245,

6.0: 245,

10.0: 245,

1.0: 245,

7.0: 245,

14.0: 245,

3.0: 245,

16.0: 245,

2.0: 245,

4.0: 245,

5.0: 245,

9.0: 245,

15.0: 245})

X_train1, X_test1, y_train1, y_test1 = train_test_split(X_resampled, y_resampled , test_size=0.2, random_state=1)

scaler = StandardScaler()

scaler.fit(X_train1)

X_train1 = scaler.transform(X_train1)

X_test1 = scaler.transform(X_test1)
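One caveat about the steps above: `RandomOverSampler` is applied to the full dataset before `train_test_split`, so copies of the same original row can end up in both the training and the test set, which tends to inflate test accuracy. A common alternative is to split first and oversample only the training part. The sketch below illustrates that order on toy data with a small hand-written oversampler; `random_oversample` is a hypothetical helper written for this example, standing in for `RandomOverSampler`.

```python
import random
from collections import Counter

def random_oversample(X, y, seed=0):
    """Duplicate random rows of each smaller class until all classes match the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(X, y):
        by_class.setdefault(label, []).append(row)
    target = max(len(rows) for rows in by_class.values())
    X_out, y_out = [], []
    for label, rows in by_class.items():
        extra = [rng.choice(rows) for _ in range(target - len(rows))]
        for row in rows + extra:
            X_out.append(row)
            y_out.append(label)
    return X_out, y_out

# Toy data: every row is unique, so leaked duplicates are easy to detect.
X = [[i] for i in range(20)]
y = [1] * 14 + [2] * 4 + [7] * 2

# 1) Split first (a simple shuffled 80/20 split).
rng = random.Random(1)
indices = list(range(len(X)))
rng.shuffle(indices)
test_idx, train_idx = indices[:4], indices[4:]
X_train, y_train = [X[i] for i in train_idx], [y[i] for i in train_idx]
X_test, y_test = [X[i] for i in test_idx], [y[i] for i in test_idx]

# 2) Oversample the training part only; the test part stays untouched.
X_train_bal, y_train_bal = random_oversample(X_train, y_train)

print(Counter(y_train_bal))
leaked = [row for row in X_test if row in X_train_bal]
print("test rows also present in training data:", leaked)
```

With this order the test set keeps the original class distribution, so accuracy figures are likely to be lower than those obtained by resampling first, but they describe performance on unseen patients.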

Principal component analysis

from sklearn.decomposition import PCA

pca = PCA(.98)

pca.fit(X_train1)

pca.n_components_

99

X_train1 = pca.transform(X_train1)

X_test1 = pca.transform(X_test1)
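Passing a float such as `.98` to `PCA` asks scikit-learn to keep the smallest number of components whose cumulative explained-variance ratio exceeds that fraction, which is where the 99 components above come from. The numpy-only sketch below reproduces that selection rule on random data; the helper name `n_components_for` and the synthetic matrix are assumptions for illustration.

```python
import numpy as np

def n_components_for(X, variance_fraction):
    """Smallest number of principal components whose cumulative variance ratio exceeds the fraction."""
    X_centered = X - X.mean(axis=0)
    # Singular values of the centered data give the variance along each principal axis.
    singular_values = np.linalg.svd(X_centered, compute_uv=False)
    explained_variance = singular_values ** 2 / (X.shape[0] - 1)
    ratio_cumsum = np.cumsum(explained_variance / explained_variance.sum())
    k = int(np.searchsorted(ratio_cumsum, variance_fraction, side="right")) + 1
    return k, ratio_cumsum

rng = np.random.default_rng(0)
# Synthetic data: 200 samples, 10 features with very different scales.
scales = np.array([10, 8, 6, 4, 2, 1, 0.5, 0.2, 0.1, 0.05])
X = rng.normal(size=(200, 10)) * scales

k, ratio_cumsum = n_components_for(X, 0.98)
print("components kept:", k)
print("variance retained:", ratio_cumsum[k - 1])
```

Because the threshold is a fraction of total variance, the number of components kept depends on how the features are scaled, which is one reason the data is standardized before `pca.fit`.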

KNN with PCA

classifier = KNeighborsClassifier()

classifier.fit(X_train1, y_train1)

Y_pred = classifier.predict(X_test1)

knnp_train_accuracy = accuracy_score(y_train1,classifier.predict(X_train1))

knnp_test_accuracy = accuracy_score(y_test1,Y_pred)

#print(knnp_train_accuracy,knnp_test_accuracy)

# Add this model's row to the results table

result.loc[len(result)] = ['Knn with PCA', knnp_train_accuracy, knnp_test_accuracy]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |
| 3 | Linear SVC | 0.880886 | 0.780220 |
| 4 | Kernelized SVC | 0.847645 | 0.780220 |
| 5 | Random Forest Classifier | 0.886427 | 0.747253 |
| 6 | Knn with PCA | 0.967818 | 0.943485 |

Logistic regression with PCA

from sklearn.linear_model import LogisticRegression

lgpclassifier = LogisticRegression(C=10,random_state = 0)

lgpclassifier.fit(X_train1, y_train1)

y_pred_train1 = lgpclassifier.predict(X_train1)

y_pred_test1 = lgpclassifier.predict(X_test1)

lgp_train_accuracy = accuracy_score(y_train1,y_pred_train1)

lgp_test_accuracy = accuracy_score(y_test1,y_pred_test1)

#print(lgp_train_accuracy,lgp_test_accuracy)

# Add this model's row to the results table

result.loc[len(result)] = ['Logestic Regression PCA', lgp_train_accuracy, lgp_test_accuracy]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |
| 3 | Linear SVC | 0.880886 | 0.780220 |
| 4 | Kernelized SVC | 0.847645 | 0.780220 |
| 5 | Random Forest Classifier | 0.886427 | 0.747253 |
| 6 | Knn with PCA | 0.967818 | 0.943485 |
| 7 | Logestic Regression PCA | 0.999215 | 0.968603 |

Decision tree classifier with PCA

from sklearn.tree import DecisionTreeClassifier

dtpclassifier = DecisionTreeClassifier(criterion = 'entropy', random_state = 0)

dtpclassifier.fit(X_train1, y_train1)

y_pred_test = dtpclassifier.predict(X_test1)

y_pred_train = dtpclassifier.predict(X_train1)

dtp_train_recall_score = recall_score(y_train1, y_pred_train, average='weighted')

dtp_test_recall_score = recall_score(y_test1, y_pred_test, average='weighted')

dtp_train_accuracy_score = accuracy_score(y_train1, y_pred_train)

dtp_test_accuracy_score = accuracy_score(y_test1, y_pred_test)

# Add this model's row to the results table

result.loc[len(result)] = ['Decision Tree with PCA', dtp_train_accuracy_score, dtp_test_accuracy_score]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |
| 3 | Linear SVC | 0.880886 | 0.780220 |
| 4 | Kernelized SVC | 0.847645 | 0.780220 |
| 5 | Random Forest Classifier | 0.886427 | 0.747253 |
| 6 | Knn with PCA | 0.967818 | 0.943485 |
| 7 | Logestic Regression PCA | 0.999215 | 0.968603 |
| 8 | Decision Tree with PCA | 1.000000 | 0.962323 |

Linear SVM with PCA

from sklearn.svm import SVC

classifier = SVC(kernel = 'linear', random_state = 0,probability=True)

classifier.fit(X_train1, y_train1)

y_pred = classifier.predict(X_test1)

#print("Accuracy score",accuracy_score(y_test1,y_pred)*100)

lsvcp_train_accuracy = accuracy_score(y_train1,classifier.predict(X_train1))

lsvcp_test_accuracy = accuracy_score(y_test1,y_pred)

# Add this model's row to the results table

result.loc[len(result)] = ['Linear SVM with PCA', lsvcp_train_accuracy, lsvcp_test_accuracy]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |
| 3 | Linear SVC | 0.880886 | 0.780220 |
| 4 | Kernelized SVC | 0.847645 | 0.780220 |
| 5 | Random Forest Classifier | 0.886427 | 0.747253 |
| 6 | Knn with PCA | 0.967818 | 0.943485 |
| 7 | Logestic Regression PCA | 0.999215 | 0.968603 |
| 8 | Decision Tree with PCA | 1.000000 | 0.962323 |
| 9 | Linear SVM with PCA | 0.999608 | 0.973312 |

Kernelized SVM with PCA

from sklearn import svm

KSVC_clf = svm.SVC(kernel='rbf',C=1,gamma=0.1)

KSVC_clf.fit(X_train1, y_train1)

y_pred_train1 = KSVC_clf.predict(X_train1)

y_pred_test1 = KSVC_clf.predict(X_test1)

ksvcp_train_accuracy_score = accuracy_score(y_train1, y_pred_train1)

ksvcp_test_accuracy_score = accuracy_score(y_test1, y_pred_test1)

# Add this model's row to the results table

result.loc[len(result)] = ['Kernelized SVM with PCA', ksvcp_train_accuracy_score, ksvcp_test_accuracy_score]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |
| 3 | Linear SVC | 0.880886 | 0.780220 |
| 4 | Kernelized SVC | 0.847645 | 0.780220 |
| 5 | Random Forest Classifier | 0.886427 | 0.747253 |
| 6 | Knn with PCA | 0.967818 | 0.943485 |
| 7 | Logestic Regression PCA | 0.999215 | 0.968603 |
| 8 | Decision Tree with PCA | 1.000000 | 0.962323 |
| 9 | Linear SVM with PCA | 0.999608 | 0.973312 |
| 10 | Kernelized SVM with PCA | 1.000000 | 0.995290 |

Random forest with PCA

from sklearn.ensemble import RandomForestClassifier

rfp_clf = RandomForestClassifier()

rfp_clf.fit(X_train1, y_train1)

RandomForestClassifier()

y_pred_train1 = rfp_clf.predict(X_train1)

y_pred_test1 = rfp_clf.predict(X_test1)

rfp_train_accuracy_score = accuracy_score(y_train1, y_pred_train1)

rfp_test_accuracy_score = accuracy_score(y_test1, y_pred_test1)

# Add this model's row to the results table

result.loc[len(result)] = ['Random Forest with PCA', rfp_train_accuracy_score, rfp_test_accuracy_score]

result

| | Model | Train Accuracy | Test Accuracy |
|---|---|---|---|
| 0 | KNN Classifier | 0.650970 | 0.648352 |
| 1 | Logestic Regression | 0.939058 | 0.747253 |
| 2 | Decision Tree Classifier | 0.789474 | 0.681319 |
| 3 | Linear SVC | 0.880886 | 0.780220 |
| 4 | Kernelized SVC | 0.847645 | 0.780220 |
| 5 | Random Forest Classifier | 0.886427 | 0.747253 |
| 6 | Knn with PCA | 0.967818 | 0.943485 |
| 7 | Logestic Regression PCA | 0.999215 | 0.968603 |
| 8 | Decision Tree with PCA | 1.000000 | 0.962323 |
| 9 | Linear SVM with PCA | 0.999608 | 0.973312 |
| 10 | Kernelized SVM with PCA | 1.000000 | 0.995290 |
| 11 | Random Forest with PCA | 1.000000 | 0.995290 |

result.plot(kind="bar",figsize=(15,4))

plt.title('Train&Test Scores of Classifiers')

plt.xlabel('Models')

plt.ylabel('Scores')

plt.legend(loc=4 , bbox_to_anchor=(1.2, 0))

plt.show();

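Results

After we apply PCA to the resampled data, the models perform noticeably better. The reason is that PCA reduces the complexity of the data. It builds components that emphasize the directions of largest variance, and those components are uncorrelated by construction, so PCA takes care of collinearity in a wide dataset. PCA also reduces overall run time, which is a real benefit when there are many variables.

The best model is the kernelized SVM with PCA, with a test accuracy of 99.53%; the random forest with PCA reaches the same score in the table above.

Code and dataset download

Author

arwin.yu.98@gmail.com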